The Symbiosis of Deep Learning and Differential Equations (DLDE)

NeurIPS 2021 Workshop

Introduction

The focus of this workshop is on the interplay between deep learning (DL) and differential equations (DEs). In recent years, there has been a rapid increase in machine learning applications in the computational sciences, with some of the most impressive results at the interface of DL and DEs. These successes have widespread implications, as DEs are among the best-understood tools for the mathematical analysis of scientific knowledge, and they are fundamental building blocks for mathematical models in engineering, finance, and the natural sciences. This relationship is mutually beneficial. DL techniques have been used in a variety of ways to dramatically enhance the effectiveness of DE solvers and computer simulations. Conversely, DEs have also been used as mathematical models of the neural architectures and training algorithms arising in DL.

This workshop aims to bring together researchers from both disciplines to encourage intellectual exchange and cultivate relationships between the two communities. The scope of the workshop spans topics at the intersection of DL and DEs, including theory of DL and DEs, neural differential equations, solving DEs with neural networks, and more.

Important Dates

| Milestone | Date |
| --- | --- |
| Submission Deadline (Extended) | September 24th, 2021 - Anywhere on Earth (AoE) |
| Final Decisions | October 17th, 2021 - AoE |
| Workshop Date | December 14th, 2021 - AoE |

Call for Extended Abstracts

We invite high-quality extended abstract submissions at the intersection of DEs and DL. Example topics (non-exhaustive list):

- How can DE models provide insights into DL?

  - What families of functions are best represented by different neural architectures? [8]

  - Can this connection guide the design of new neural architectures? [3, 4, 13]

  - Can DE models be used to derive bounds on generalization error? [12]

  - What insights can DE models provide into training dynamics? [6, 7]

  - Can these insights guide the design of weight initialization schemes?

- How can DL be used to enhance the analysis of DEs? [1, 2]

  - Solving high-dimensional DEs (e.g., many-body physics, multi-agent models, …) [5, 10, 15]

  - Solving highly parameterized DEs [9]

  - Solving inverse problems [11, 14]

  - Solving DEs with irregular solutions (e.g., exhibiting singularities, shocks, …)

Submission:

Accepted submissions will be presented during joint poster sessions and will be made publicly available as non-archival reports, allowing future submissions to archival conferences or journals.

Exceptional submissions will be selected for either one of four 15-minute contributed talks or one of eight 5-minute spotlight oral presentations.

Submissions should be up to 4 pages, excluding references, acknowledgements, and supplementary material, and should be anonymous and in the DLDE-NeurIPS format. Long appendices are permitted but strongly discouraged, and reviewers are not required to read them. The review process is double-blind.

We also welcome submissions of recently published work that is strongly within the scope of the workshop (with proper formatting). We encourage the authors of such submissions to focus on accessibility to the wider NeurIPS community while distilling their work into an extended abstract. Submissions of this type will be eligible for poster sessions after a lighter review process.

Authors may be asked to review other workshop submissions.

Please submit your extended abstract to this address.

If you have any questions, send an email to one of the following: [luca.celotti@usherbrooke.ca, poli@stanford.edu]

Schedule

(EST) Morning

- 06:45 : Introduction and opening remarks

- 07:00 : Invited Talk 1 - Weinan E - Machine Learning and PDEs

- 07:45 : Spotlight Talk 1 - NeurInt-Learning Interpolation by Neural ODEs

- 08:00 : Spotlight Talk 2 - Neural ODE Processes: A Short Summary

- 08:15 : Invited Talk 2 - Neha Yadav - Deep learning methods for solving differential equations

- 09:00 : Coffee Break

- 09:15 : Spotlight Talk 3 - GRAND: Graph Neural Diffusion

- 09:30 : Spotlight Talk 4 - Neural Solvers for Fast and Accurate Numerical Optimal Control

- 09:45 : Poster Session 1 - GatherTown room

- 10:30 : Invited Talk 3 - Philipp Grohs - The Theory-to-Practice Gap in Deep Learning

- 11:15 : Lunch Break

(EST) Afternoon

- 13:45 : Spotlight Talk 5 - Deep Reinforcement Learning for Online Control of Stochastic Partial Differential Equations

- 14:00 : Spotlight Talk 6 - Statistical Numerical PDE: Fast Rate, Neural Scaling Law and When it’s Optimal

- 14:15 : Coffee Break

- 14:30 : Poster Session 2 - GatherTown room

- 15:15 : Invited Talk 4 - Anima Anandkumar - Neural operator: A new paradigm for learning PDEs

- 16:00 : Spotlight Talk 7 - HyperPINN: Learning parameterized differential equations with physics-informed hypernetworks

- 16:15 : Spotlight Talk 8 - Learning Implicit PDE Integration with Linear Implicit Layers

(EST) Night

- 23:00 : Panel discussion - Solving Differential Equations with Deep Learning: State of the Art and Future Directions

- 24:00 : Final Remarks

Invited Speakers

Neha Yadav (confirmed) is an assistant professor at the National Institute of Technology Hamirpur. Her research focuses on using neural networks and other machine learning techniques to solve differential equations, and on exploring new optimization algorithms such as harmony search and particle swarm optimization. In addition to her many publications, she is a co-author of "An Introduction to Neural Network Methods for Differential Equations", the first textbook on the subject. [Webpage]

Philipp Grohs (confirmed) is a professor at the University of Vienna. His research interests lie in approximation theory and computational harmonic analysis. In addition, he is known for his work on numerical methods for PDEs, and has made important contributions to the development and analysis of machine learning algorithms for the numerical approximation of high-dimensional PDEs. Of particular note is his theoretical work on error estimates for deep network approximations to the solutions of PDEs, including his proofs that neural networks overcome the curse of dimensionality in these applications. [Webpage]

Weinan E (confirmed) is a professor in Applied and Computational Mathematics at Princeton University. His current research interests lie at the intersection of mathematics, physics, and machine learning. He has made important contributions to the theoretical foundations of deep neural networks, exploring questions on training dynamics and generalization. Additionally, he has driven the development of machine learning algorithms for use in computational science, including solution methods for complicated PDE problems and techniques for incorporating mathematical models into data-driven approaches. [Webpage]

Anima Anandkumar (confirmed) holds dual positions in academia and industry. She is a Bren Professor in the CMS department at Caltech and a director of machine learning research at NVIDIA. At Caltech, she is the co-director of DOLCIT and co-leads the AI4Science initiative, along with Yisong Yue. She has spearheaded the development of tensor algorithms, first proposed in her seminal paper, which enable efficient processing of multidimensional and multimodal data. Her contributions to physics-informed deep learning, such as the Fourier Neural Operator network for solving parametric PDEs, have sparked great interest in the community. [Webpage]

Recordings

The workshop will be broadcast via Zoom, and poster sessions will be held on GatherTown. We will upload the recordings to YouTube.

Organizers

Columbia University

University of Toronto, Vector Institute, NVIDIA

Université de Sherbrooke

Google Research

University of Guelph

University of Oxford

Ontario Tech University

University of Tokyo, RIKEN

Rutgers University

Stanford University

University of California, Berkeley

Rutgers University

Attending the workshop

Poster sessions in GatherTown

Expert panel session

Acknowledgments

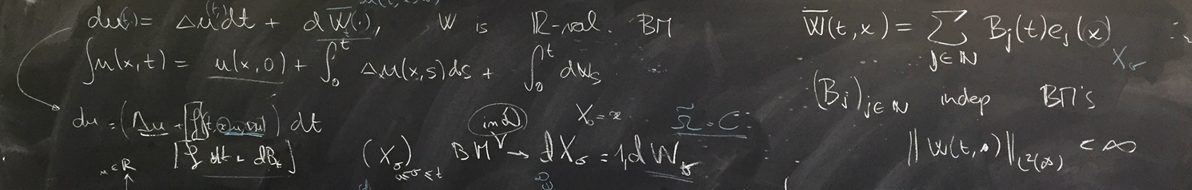

Thanks to visualdialog.org for the webpage format. Thanks to whatsonmyblackboard for the cozy blackboard photo.

References

[2] D. Kochkov, J.A. Smith, A. Alieva, Q. Wang, M.P. Brenner, S. Hoyer. Machine learning–accelerated computational fluid dynamics. PNAS, 2021.

[3] E. Haber, L. Ruthotto. Stable architectures for deep neural networks. Inverse Problems, 2017.

[4] R.T.Q. Chen, Y. Rubanova, J. Bettencourt, D. Duvenaud. Neural Ordinary Differential Equations. NeurIPS, 2018.

[5] G. Carleo, M. Troyer. Solving the quantum many-body problem with artificial neural networks. Science, 2017.

[6] P. Chaudhari, S. Soatto. Stochastic gradient descent performs variational inference, converges to limit cycles for deep networks. ICLR, 2018.

[7] Q. Li, C. Tai, W. E. Stochastic Modified Equations and Adaptive Stochastic Gradient Algorithms. ICML, 2017.

[8] W. E, C. Ma, L. Wu. The Barron Space and the Flow-Induced Function Spaces for Neural Network Models. Constructive Approximation, 2021.

[9] Z. Li, N. Kovachki, K. Azizzadenesheli, B. Liu, K. Bhattacharya, A. Stuart, A. Anandkumar. Fourier Neural Operator for Parametric Partial Differential Equations. ICLR, 2021.

[10] J. Sirignano, K. Spiliopoulos. DGM: A deep learning algorithm for solving partial differential equations. Journal of Computational Physics, 2018.

[11] L. Lu, X. Meng, Z. Mao, G.E. Karniadakis. DeepXDE: A Deep Learning Library for Solving Differential Equations. SIAM Review, 2021.

[12] S. Mishra, R. Molinaro. Estimates on the generalization error of physics-informed neural networks for approximating a class of inverse problems for PDEs. IMA Journal of Numerical Analysis, 2021.

[13] S. Bai, J.Z. Kolter, V. Koltun. Deep Equilibrium Models. NeurIPS, 2019.

[14] N. Yadav, K.S. McFall, M. Kumar, J.H. Kim. A length factor artificial neural network method for the numerical solution of the advection dispersion equation characterizing the mass balance of fluid flow in a chemical reactor. Neural Computing and Applications, 2018.

[15] C. Beck, S. Becker, P. Grohs, N. Jaafari, A. Jentzen. Solving the Kolmogorov PDE by Means of Deep Learning. Journal of Scientific Computing, 2021.